Dominic DiFranzo

Assistant Professor

Computer Science & Engineering

Lehigh University

- EMAIL : djd219@lehigh.edu

I am an Assistant Professor in the Department of Computer Science and Engineering at Lehigh University. My research in human-computer interaction translates established social science theories into design interventions that encourage social media users to stand up to cyberbullies, fact-check fake news stories, and engage in other prosocial actions. To better implement and test these design interventions, I have also developed new experimental tools and methods that create ecologically valid social media simulations, giving researchers control of both the technical interface and the social situations found on social media platforms. My research has been published in numerous conferences and journals, including the ACM CHI Conference, the ACM CSCW Conference, the International World Wide Web Conference, and the ACM Web Sci Conference. I hold a PhD in Computer Science from Rensselaer Polytechnic Institute and was a member of the Tetherless World Constellation. I was also a Post-Doctoral Associate in the Social Media Lab at Cornell University.

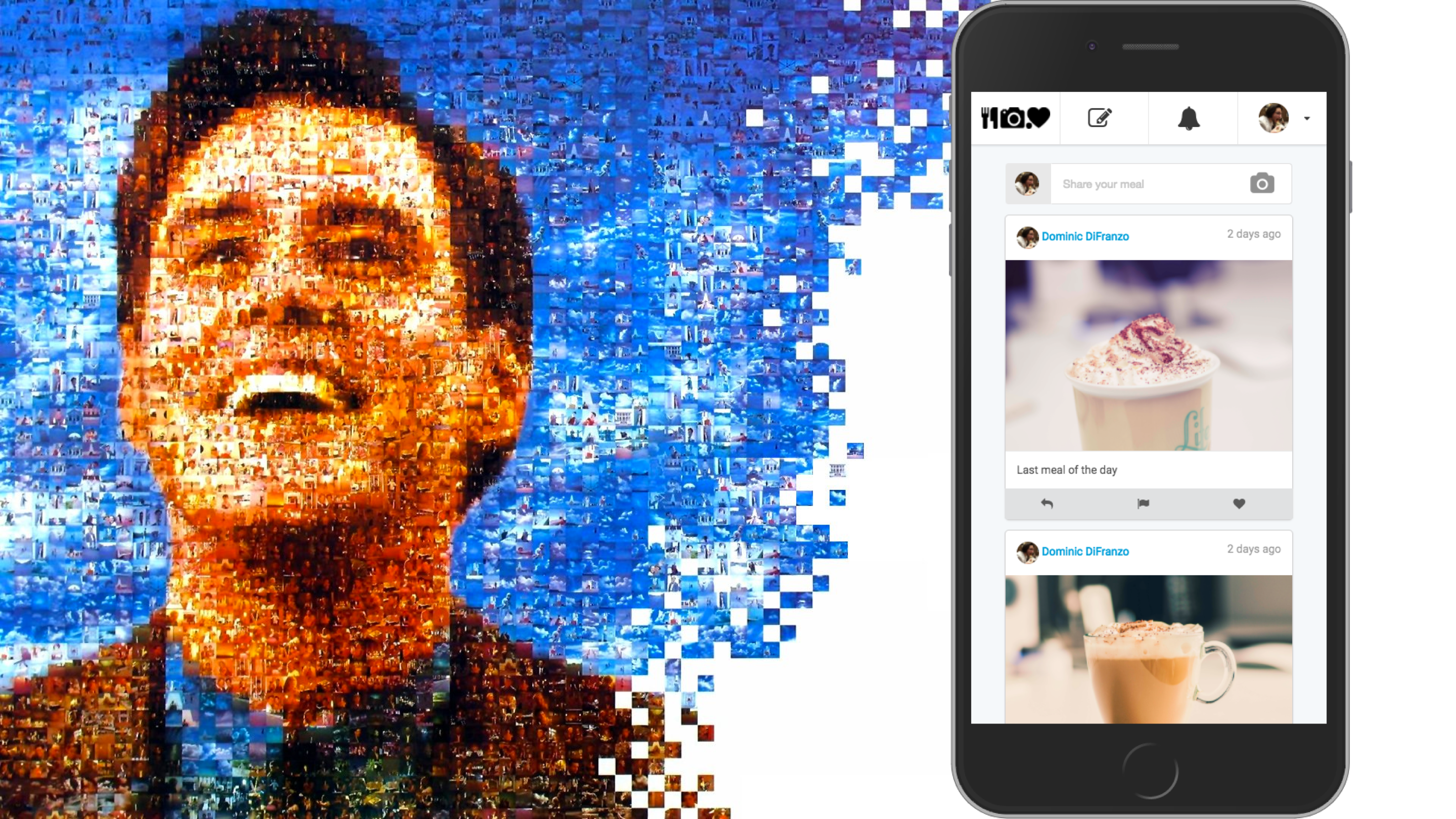

Truman is a complete social media simulation platform for experimental research. It creates a custom social media site where every user, post, like, reply, notification, and interaction can be created, curated, and controlled by the research team. This social media simulation platform (named Truman after the 1998 film, The Truman Show) can create a controlled social media experience for participants. In this way, each participant is completely "alone" on this social media site, as every user they interact with is an actor played by a "bot". This allows for complete control of the social situations and actions that take place on the platform, as every participant has the exact same social media experience. The Truman platform also allows for complete replication of any study, as all the code, data, and media necessary to run this social media simulation are freely available in a public GitHub repository. Truman is an open source platform that is freely available for other researchers to use and build upon for their own studies.
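As a rough illustration of how scripted actors can give every participant the same experience, the sketch below schedules bot posts relative to the moment a participant joins. It is not Truman's actual code: the `ScriptedPost` class, the `SCRIPT` list, the `visible_posts` function, and all actor names and post texts are hypothetical and chosen only for this example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class ScriptedPost:
    actor: str          # hypothetical bot account name
    offset: timedelta   # how long after the participant joins the post appears
    text: str


# Hypothetical script shared by every participant in a study condition.
SCRIPT = [
    ScriptedPost("actor_a", timedelta(minutes=5), "First scripted post"),
    ScriptedPost("actor_b", timedelta(hours=2), "Second scripted post"),
    ScriptedPost("actor_a", timedelta(days=1), "Third scripted post"),
]


def visible_posts(joined_at: datetime, now: datetime) -> list[ScriptedPost]:
    """Return the scripted posts a participant should see at time `now`."""
    elapsed = now - joined_at
    due = [post for post in SCRIPT if post.offset <= elapsed]
    # Newest post first, as in a typical feed.
    return sorted(due, key=lambda post: post.offset, reverse=True)


# Two participants who join at different times see the same feed
# once the same amount of time has passed since each one joined.
first_joined = datetime(2024, 1, 1, 9, 0)
second_joined = datetime(2024, 3, 15, 18, 30)
after = timedelta(hours=3)

first_feed = visible_posts(first_joined, first_joined + after)
second_feed = visible_posts(second_joined, second_joined + after)
```

Keying the script to elapsed time rather than wall-clock time is one simple way to keep the simulated timeline identical across participants who enroll on different days.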

See on GitHub

Social Media TestDrive is an educational platform that lets kids practice being online while ensuring their mistakes won’t come back to haunt them, online or off. The training simulation (built on the Truman platform) includes a number of lesson plans to teach social media skills to kids. It has been used by over 800,000 middle school students across the US.

See Website Try a Lesson See on GitHub

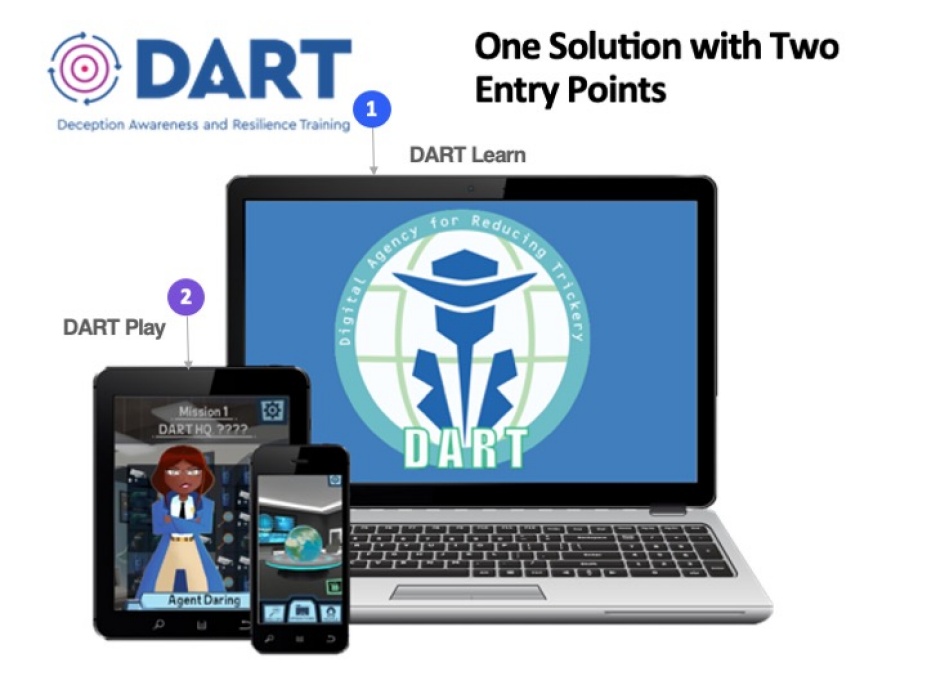

DART is a cross-disciplinary, multi-institutional, user-centered effort to develop games and a learning platform to help older adults recognize and navigate scams. The Deception Awareness and Resilience Training (DART) platform aims to address the lack of appropriate resources and training for older populations. A collaboration between researchers, game designers, and community organizations, our platform is unique in tailoring its curriculum and using gamification to make training accessible and engaging for seniors. The overarching goal of our DART project is the development of a research and educational platform with integrated digital tools, advanced pedagogical techniques, and timely materials to increase disinformation awareness and improve resilience, to inoculate users against the impact of harmful disinformation, and to prevent its spread.

See Site Play Demo See Video

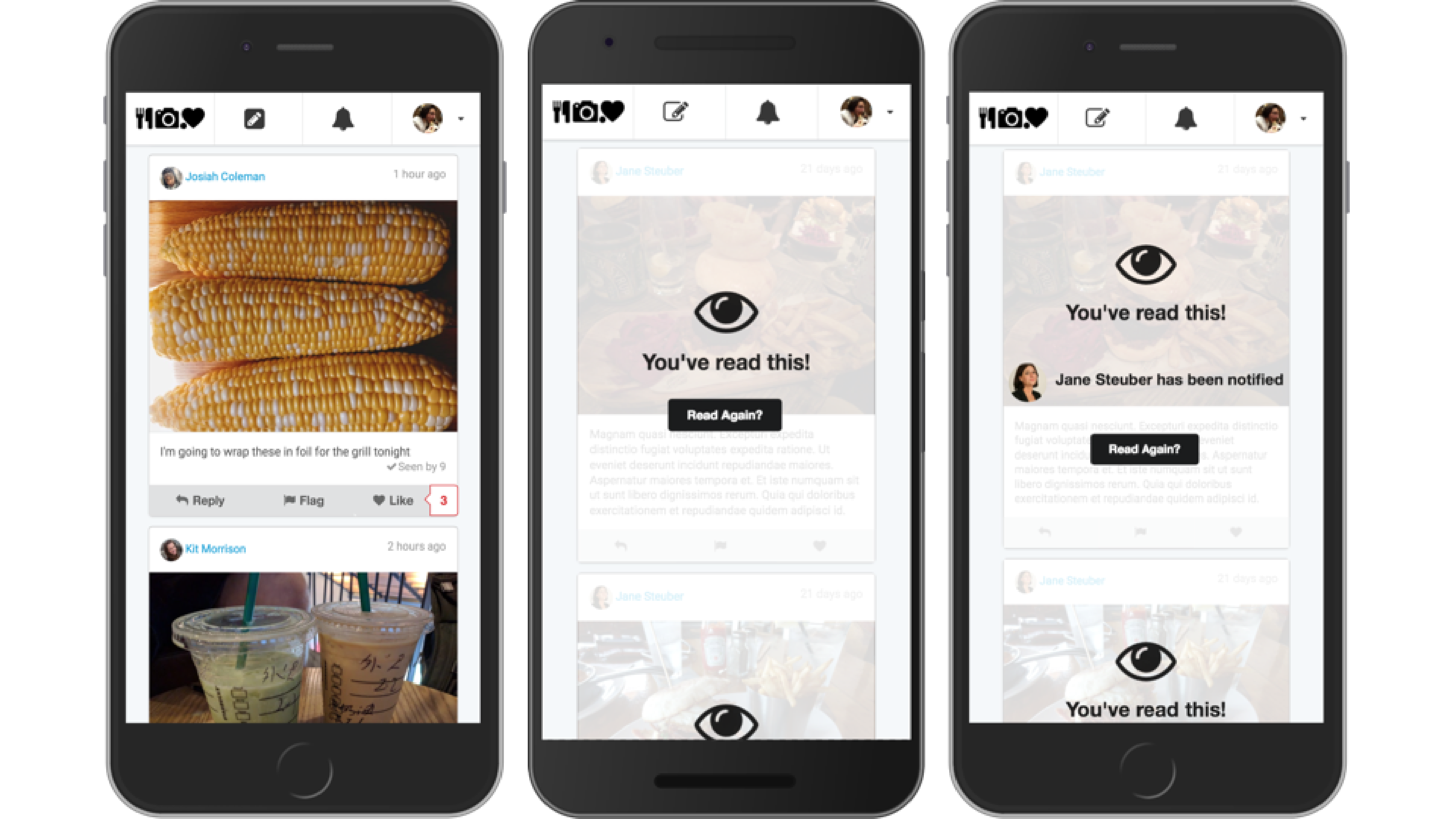

Although bystander intervention can mitigate the negative effects of cyberbullying, few bystanders ever attempt to intervene. In this study, we explored the effects of interface design on bystander intervention using a custom-made simulated social media platform. Participants took part in a three-day, in-situ experiment, in which they were exposed to several cyberbullying incidents. Depending on the experimental condition, they received different information about the audience size and viewing notifications intended to increase a sense of personal responsibility in bystanders. Results indicated that bystanders were more likely to intervene indirectly than directly, and that information about the audience size and viewership increased the likelihood of flagging cyberbullying posts through serial mediation of public surveillance, accountability, and personal responsibility. The study has implications for understanding the bystander effect in cyberbullying and for developing design solutions that encourage bystander intervention in social media.

See Paper See on GitHub

This research aims to explore the impact of AI systems on individuals, groups, and communities. We have run studies that have explored how personification of AI agents affects people’s sense of privacy violation, how smart reply systems affect group communication in chat apps, and how AI-based social media moderation systems are perceived differently than human-based ones.

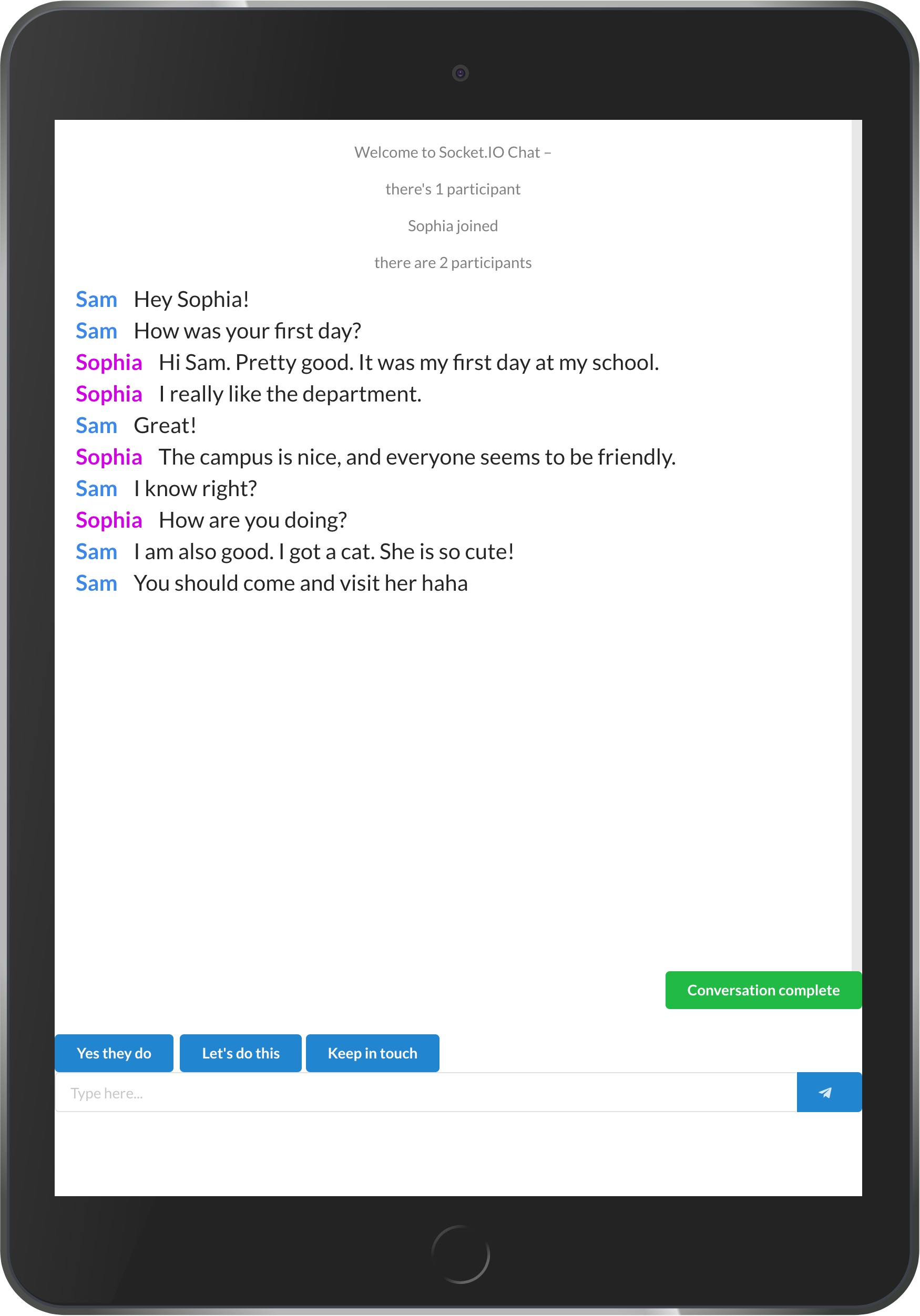

Moshi is a web-based research platform that allows researchers to engage online participants in text-based, real-time interpersonal communication. Moshi provides a modular scaffold for experiments on text-based AI-mediated communication (AI-MC), allowing other researchers to modify it and explore their own research questions. The interface is responsive to device type and resizes itself to work well on desktops, tablets, and mobile devices (e.g., Android and iOS). In addition to the standard text box for sending messages, participants can also receive smart replies that they can click or tap to send automatically. As in existing chat apps, users can also scroll to see the history of the conversation at any point.

Read the Paper See on GitHub

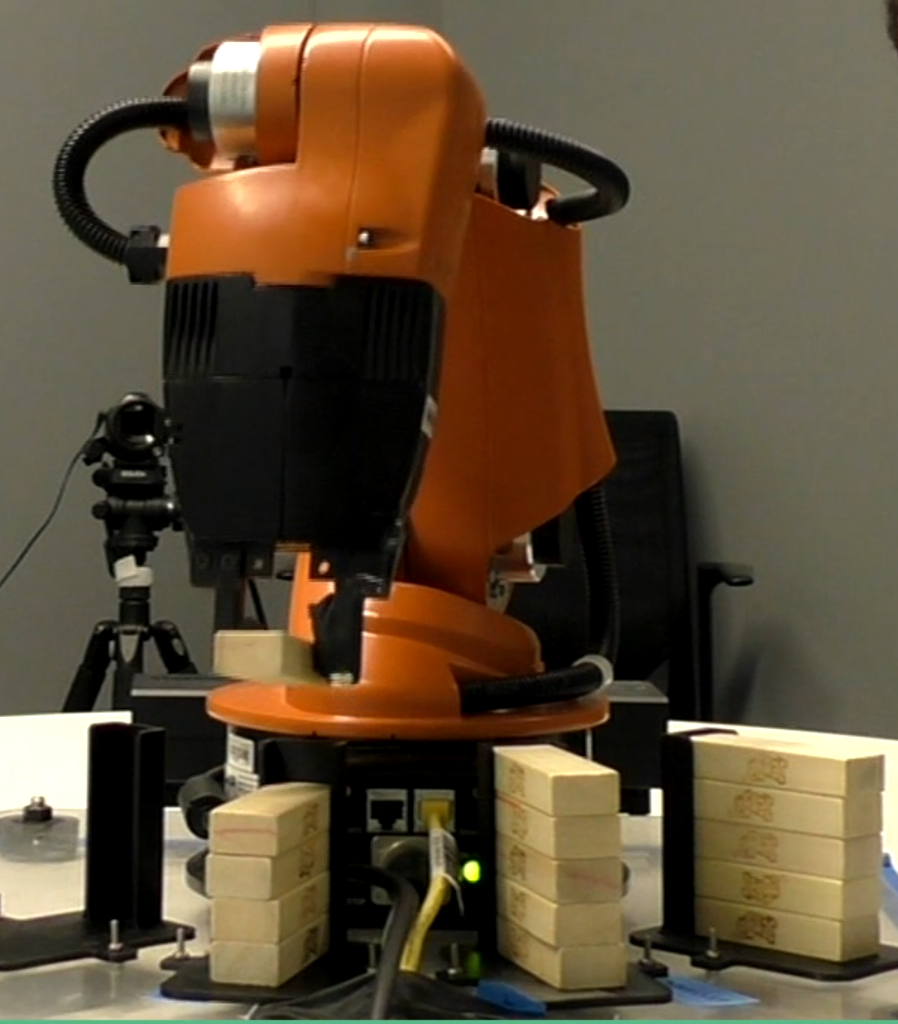

This research aims to explore whether robots can shape interpersonal dynamics between people through simple task-related behavior. Autonomous robots are increasingly used to support work in groups and teams, but it is unclear how a robot's behavior shapes the way people perceive and interact with each other. The proposed work will examine a robot-assisted assembly setting in which a robot delivers parts to two or more humans working on an assembly task. By systematically varying the ways in which the robot delivers the parts, we seek to understand how the robot's behavior influences interaction dynamics between group members.

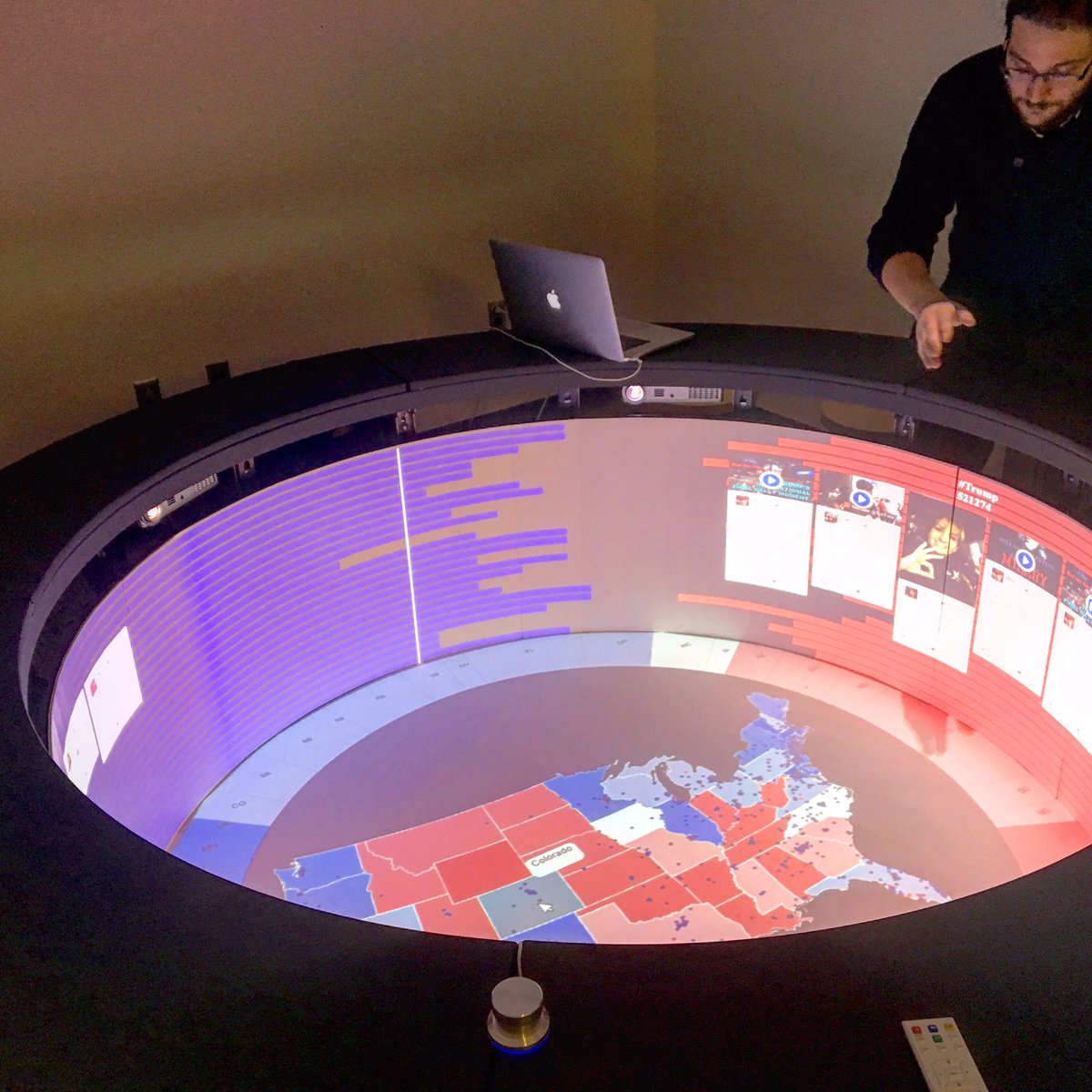

As part of the EPSRC-funded project SOCIAM, I built a real-time data visualization that combined traditional polling data with social media posts for the 2016 UK and US Elections. The application was designed and built for the Rensselaer Polytechnic Institute EMPAC Campfire, a novel multi-user, collaborative, immersive computing interface that consists of a desk-height panoramic screen and floor projection that users gather around and look into. This visualization system allows groups of users to explore, in real time, the local social media conversations taking place about the elections, while also exploring the historical polling and opinion data.

Video on YouTube Code on GitHub

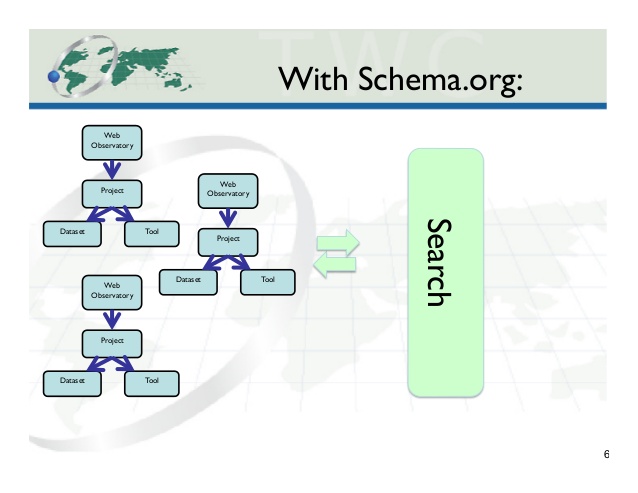

The multi-disciplinary nature of Web Science and the large size and diversity of data collected and studied by its practitioners have inspired a new type of Web resource known as the Web Observatory. Web Observatories are platforms that enable researchers to collect, analyze, and share data about the Web and to share tools for Web research. In this effort, I helped to create a semantic model for describing Web Observatories as an extension to the schema.org microdata vocabulary collection. This model enables Web Observatory curators to better expose and explain their individual Web Observatories to others, thereby enabling better collaboration between researchers across the Web Science community.

Documentation Webinar on Web Observatories

Virtual worlds present a natural test bed to observe and study the social behaviors of people at a large scale. Analyzing the rich "big data" generated by the activities of players in a virtual world enables us to better understand the online society, to validate and propose sociological theories, and to provide insights into how people behave in the real world. However, how to better store and analyze such complex big data has always been an issue that prevents in-depth analyses. In this work, we explored how Semantic Web technologies can address these issues, and explained certain social concepts expressed within a massively multiplayer online game, EverQuest II.

Paper

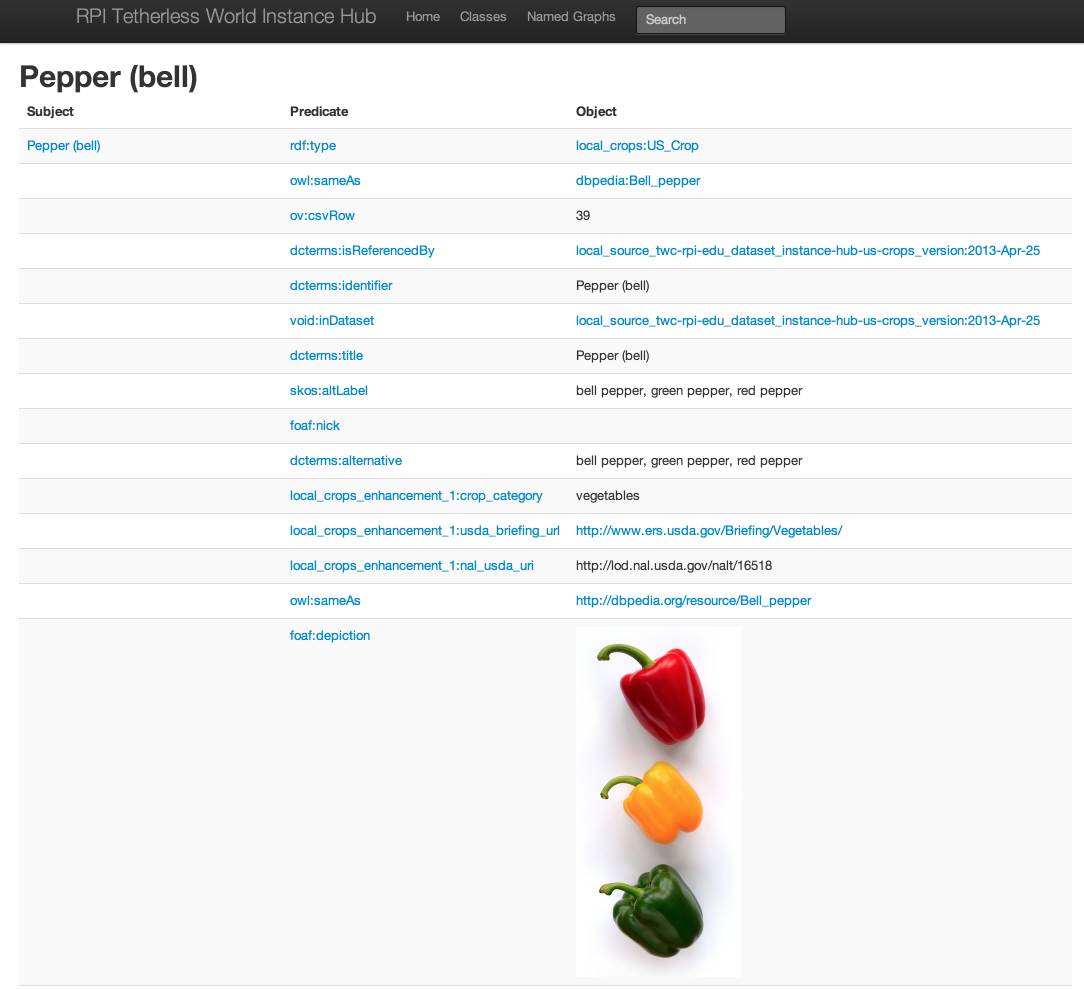

On the Semantic Web, there is a need for ways to express authoritative references to entities, and to do so in a way that is descriptive of those entities for both computers and humans. The Instance Hub provides a way of doing this for collections of entities in related categories, e.g., countries, US states, US government agencies, crops, toxic chemicals, etc. A URI (uniform resource identifier) is a string of characters that identifies a name or a resource on the web (e.g., http://logd.tw.rpi.edu/id/us/state/New_York). The Instance Hub creates URI standards for common concepts in open government data, and creates an interface to search and display them. Users can logically navigate the hierarchy of URIs in the Instance Hub, viewing categorical information at varying levels of specificity.
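To make the idea of a navigable URI hierarchy concrete, here is a minimal sketch that splits the example URI above into successively more specific levels. It assumes, purely for illustration, that each path prefix stands for a broader category; whether the Instance Hub resolves every intermediate URI this way is not stated here, and the `uri_hierarchy` function is a hypothetical helper, not part of the project.

```python
from urllib.parse import urlparse


def uri_hierarchy(uri: str) -> list[str]:
    """Return one URI per path level, from broadest to most specific."""
    parsed = urlparse(uri)
    segments = [segment for segment in parsed.path.split("/") if segment]
    base = f"{parsed.scheme}://{parsed.netloc}"
    # Assumption: every path prefix names a broader category.
    return [
        base + "/" + "/".join(segments[:depth])
        for depth in range(1, len(segments) + 1)
    ]


levels = uri_hierarchy("http://logd.tw.rpi.edu/id/us/state/New_York")
for level in levels:
    print(level)
```

Under that assumption, the last entry is the entity itself (New York) and the entries before it are the categories a user would pass through on the way there.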

Data Files

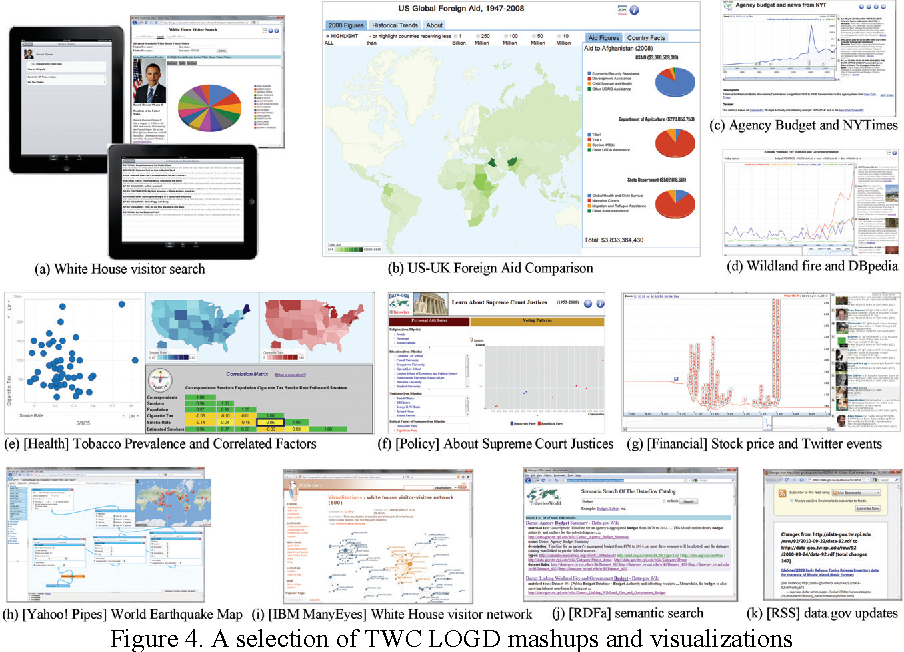

The LOGD project investigates the role of Semantic Web technologies, especially Linked Data, in producing, enhancing, and utilizing government data published on Data.gov and other websites. A large portion of the government data published on the Web is not necessarily ready for mashups. The Tetherless World Constellation (TWC) is now publishing over 8 billion RDF triples converted from hundreds of government-related datasets from Data.gov and other sources (e.g., financial data, non-US government data) covering a wide range of topics (e.g., government spending, energy usage, and public healthcare). We are also interlinking these data and building mashups using popular Web tools and APIs. Data and mashup visualizations from this project have been featured on Data.gov and WhiteHouse.gov.
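For readers unfamiliar with RDF, the following sketch shows what converting one tabular record into triples and linking it to a shared entity URI can look like. It is not the LOGD conversion pipeline: the `http://example.org/` namespace, the record URI, the column names, and the helper functions are all hypothetical, and the output uses a simplified N-Triples-style serialization.

```python
class URI(str):
    """Marks a string as a URI rather than a plain literal value."""


EX = "http://example.org/vocab/"  # hypothetical vocabulary namespace


def row_to_triples(subject: URI, row: dict) -> list[tuple]:
    """Turn each column of a record into a (subject, predicate, object) triple."""
    return [(subject, URI(EX + column), value) for column, value in row.items()]


def format_term(term) -> str:
    """Serialize a URI in angle brackets and anything else as a quoted literal."""
    if isinstance(term, URI):
        return f"<{term}>"
    escaped = str(term).replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'


def to_ntriples(triples) -> str:
    """Write one triple per line, each terminated by ' .'."""
    return "\n".join(
        " ".join(format_term(term) for term in triple) + " ." for triple in triples
    )


# Hypothetical record; the "state" column points at a shared entity URI,
# which is what lets separate datasets be interlinked.
record = URI("http://example.org/id/record/1")
row = {
    "topic": "energy usage",
    "state": URI("http://logd.tw.rpi.edu/id/us/state/New_York"),
}
triples = row_to_triples(record, row)
print(to_ntriples(triples))
```

Because two datasets that mention New York can both point at the same state URI, a mashup can join them on that URI instead of matching free-text names.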

See on Data.gov Website

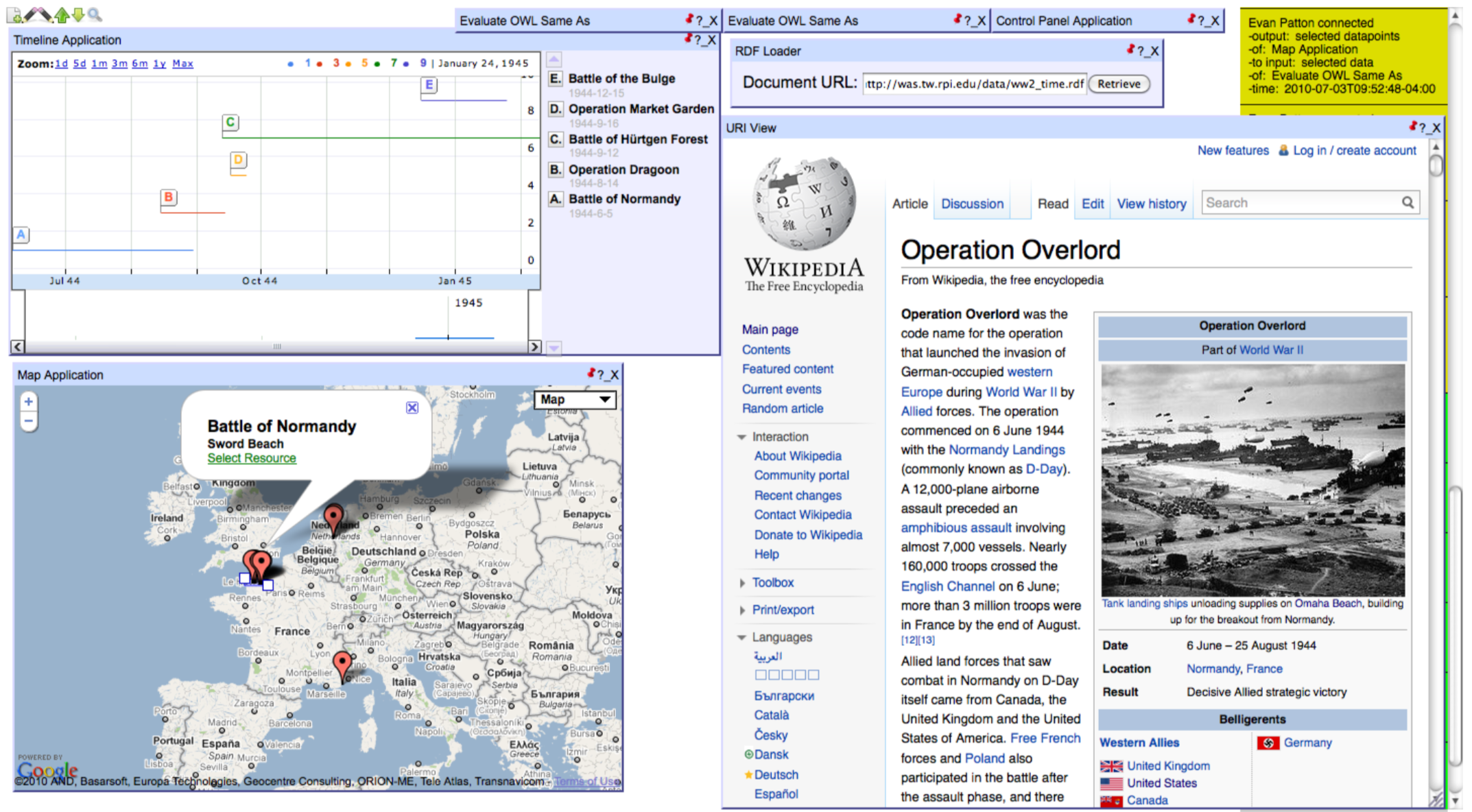

The Semantic Application Framework (SAF) is a web application that allows users to reuse RDF content from existing sites (e.g., DBpedia), extract data from social networks like Facebook and Twitter, and manipulate those data through JavaScript applets. Users combine these applets for processing and visualizing data in a graphical user interface, similar to scientific workflow systems. Unlike workflow systems, SAF encourages users to view and interact with data and to manipulate it in an exploratory fashion. User-constructed applet pipelines are encoded as RDF files, allowing publication and collaboration, thus taking a critical step forward in moving to an end-user programmable social machine environment.